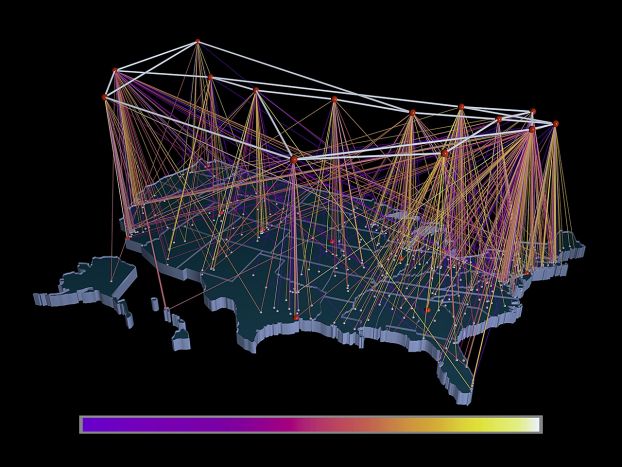

Credit: Texas Advanced Computing Center

What is a supercomputer?

The term "supercomputer" typically applies to the world's fastest computers at any given time. Most modern supercomputers are systems of interconnected computers or processors that run different parts of the same program simultaneously, performing complex operations at exceptionally fast computing speeds.

Supercomputer speeds are measured in floating-point operations per second (FLOPS), a count of the arithmetic calculations a machine completes each second. As technology advances, the list of the fastest supercomputers constantly changes. In the 1970s, supercomputers reached 160 megaflops (millions of FLOPS); today, they perform quadrillions of FLOPS (petaflops), roughly one million times more than the fastest laptop.
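To make the jump from megaflops to petaflops concrete, here is a minimal Python sketch of the unit arithmetic. The helper names (`to_flops`, `speedup`, `seconds_for`) and the one-quadrillion-operation workload are illustrative assumptions, not figures from any benchmark; only the 160-megaflop and petaflop values come from the text above.

```python
# Decimal prefixes commonly attached to FLOPS figures.
PREFIXES = {
    "mega": 10**6,
    "giga": 10**9,
    "tera": 10**12,
    "peta": 10**15,
}


def to_flops(value, prefix):
    """Convert a figure such as 160 'mega' into plain FLOPS."""
    return value * PREFIXES[prefix]


def speedup(fast_flops, slow_flops):
    """How many times faster the first machine is than the second."""
    return fast_flops / slow_flops


def seconds_for(operations, flops):
    """Idealized run time: operations divided by sustained speed."""
    return operations / flops


cray_era = to_flops(160, "mega")    # 1970s figure quoted above
one_petaflop = to_flops(1, "peta")  # one quadrillion FLOPS

# Hypothetical workload of one quadrillion operations.
workload = 10**15

print(f"Speedup: {speedup(one_petaflop, cray_era):,.0f}x")
print(f"At 1 petaflop: {seconds_for(workload, one_petaflop):.0f} second")
print(f"At 160 megaflops: {seconds_for(workload, cray_era) / 86_400:.1f} days")
```

Under these assumptions, a job that a one-petaflop system finishes in a second would have kept a 160-megaflop machine busy for about 72 days. Real programs rarely sustain a machine's peak speed, so treat the result as an upper bound on the gap rather than a measurement.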

Powering up

The first commercially available supercomputer, the Cray-1, went into production in 1977 at the NSF-supported National Center for Atmospheric Research (NSF NCAR). Its processing speeds of around 160 megaflops — now outpaced by even the most basic modern laptops by several orders of magnitude — made it the world's fastest supercomputer at that time.

The Cray-1 became an essential research tool for weather forecasting, fluid dynamics and materials science. Its unprecedented speed set a new standard for supercomputing.

After it was decommissioned, it was donated to the Smithsonian National Air and Space Museum, which maintains it as part of its permanent collection.

Credit: University Corporation for Atmospheric Research/UCAR

Credit: Donna Cox and Robert Patterson, courtesy of the National Center for Supercomputing Applications (NCSA) and the Board of Trustees of the University of Illinois

Superinfrastructure

Throughout the 1980s and 1990s, NSF programs like Supercomputer Centers and the Partnership for Advanced Computational Infrastructure provided researchers nationwide with supercomputing resources to perform ever-more-advanced research and technological innovation.

These efforts also laid the groundwork for modern high-performance computing, enabling faster, more efficient and user-friendly systems with real-world uses — from genome mapping and drug discovery to weather forecasting, aircraft design, special effects in films, personalized e-commerce recommendations and training artificial intelligence-powered chatbots like ChatGPT.

Some key technological advancements brought about by NSF funding include:

- Launching NSFNET in 1986 to connect researchers with NSF supercomputer centers. The network went on to serve as the internet's backbone until the mid-1990s.

- Advancing parallel computing architectures that use many processors simultaneously to solve complex problems, such as forecasting weather patterns or analyzing medical data, faster and more efficiently.

- Developing universal file formats to standardize data sharing, improve systems compatibility and reduce the risk of data loss due to outdated software or hardware.

- Enhancing data visualization for interpreting large datasets, such as 3D models of wind velocities in thunderstorms and simulations of human blood circulation.

- Creating user-friendly desktop software to help users organize, access and navigate digital information.
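The parallel computing idea in the list above, many processors each working on one part of the same problem, can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not how any NSF-funded system is programmed: the function names are hypothetical, and it uses threads from Python's standard library, which show the split-and-combine pattern but do not deliver a real speedup for arithmetic in standard CPython. Production supercomputer codes typically spread work across many separate processors and machines.

```python
from concurrent.futures import ThreadPoolExecutor


def partial_sum(bounds):
    """Sum the squares of the integers in one slice of the problem."""
    start, stop = bounds
    return sum(i * i for i in range(start, stop))


def parallel_sum_of_squares(n, workers=4):
    """Split 0..n-1 into chunks, process them concurrently, combine."""
    if n <= 0:
        return 0
    step = -(-n // workers)  # ceiling division so every number is covered
    chunks = [(lo, min(lo + step, n)) for lo in range(0, n, step)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Each worker handles one chunk; the partial results are then added.
        return sum(pool.map(partial_sum, chunks))


print(parallel_sum_of_squares(1_000_000))
```

The same three steps (divide the problem, compute the pieces at the same time, merge the answers) underlie large-scale tasks such as weather forecasting, where each processor might handle one region of the atmosphere.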

Discoveries at warp speed

Through programs like the NSF Advanced Cyberinfrastructure Coordination Ecosystem: Services & Support (ACCESS), NSF connects researchers to supercomputing resources, enabling breakthroughs such as:

Wildfire risks to human health

Using the Cheyenne supercomputer at the NCAR-Wyoming Supercomputing Center, researchers found that larger, more intense wildfires in the Pacific Northwest have shifted North America's air pollution patterns, causing a late summer spike in harmful pollutants across the continent.

Advancing preeclampsia research

Simulations on the Expanse supercomputer at the San Diego Supercomputer Center revealed key genetic biomarkers indicative of preeclampsia complications, which affect roughly 1 in 25 U.S. pregnancies, paving the way for earlier diagnosis and improved treatments.

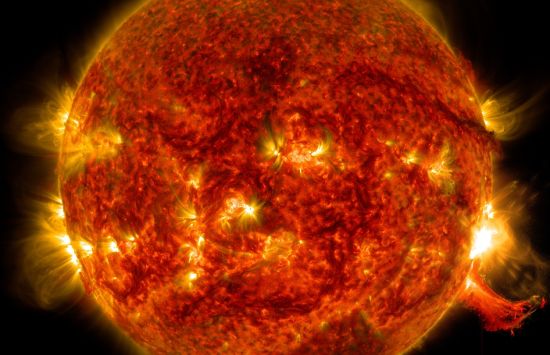

Space weather prediction

In 2021, scientists used the Frontera supercomputer at the Texas Advanced Computing Center (TACC) to improve space weather forecasting, helping to protect power grids and communication satellites.

Earthquake risk prediction

Using the Frontera supercomputer at TACC, researchers trained machine learning models to predict areas and structures most at risk of collapse after an earthquake, advancing emergency response and recovery efforts.

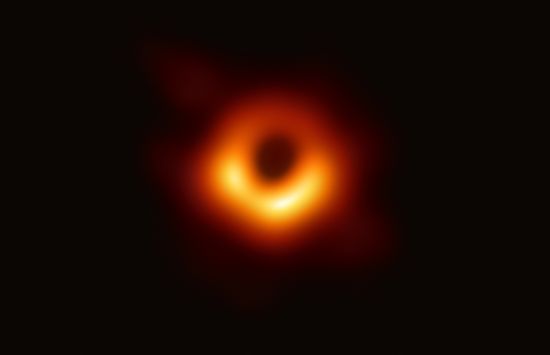

Black hole image capture

Simulations on the Blue Waters supercomputer at the University of Illinois' National Center for Supercomputing Applications enabled researchers to capture the first image of a black hole in 2019, reshaping the understanding of these cosmic giants.

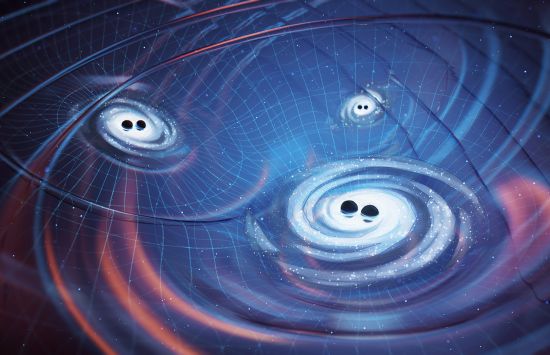

Gravitational wave detection

The Stampede supercomputer at the Texas Advanced Computing Center (TACC) helped confirm the first detection of gravitational waves in 2015 by the NSF Laser Interferometer Gravitational-Wave Observatory (LIGO), a discovery that earned Rainer Weiss, Barry C. Barish and Kip S. Thorne the 2017 Nobel Prize in Physics.